101. Introducing parallel computations with arrays

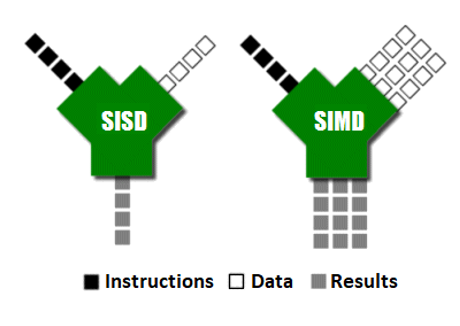

There was a time when CPUs could perform operations on data only in the traditional mode known as SISD (Single Instruction, Single Data), the mode of the classic von Neumann architecture. In other words, one CPU cycle processes a single instruction on a single data item: the processor applies that instruction to that data and returns a result.

Modern CPUs are capable of parallel computations and can work in a mode known as SIMD (Single Instruction, Multiple Data). In this mode, one CPU cycle applies a single instruction to multiple data items simultaneously, which should, in theory, speed things up and improve performance. The following diagram highlights these statements:

Figure 5.1 – SISD vs. SIMD

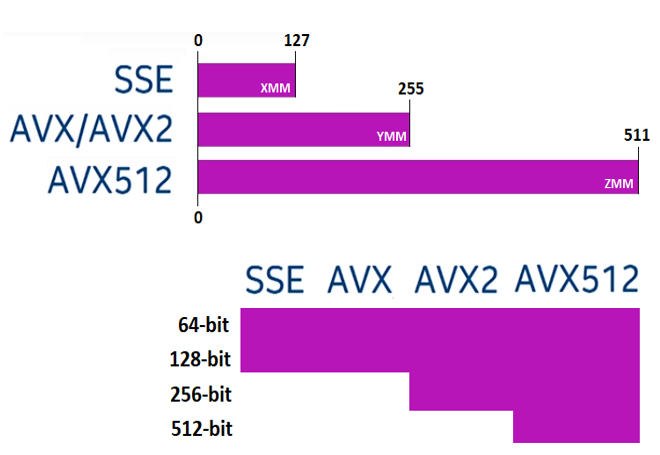
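In Java, the SISD side of Figure 5.1 corresponds to an ordinary element-by-element loop over two arrays. The following minimal sketch adds X and Y one pair of elements per iteration; the class name `ScalarAdd` and the method `add` are illustrative names, not part of any library. Keep in mind that the loop only describes the shape of the source code: HotSpot's JIT compiler can auto-vectorize simple loops like this one, so the machine code that actually runs may still use SIMD instructions.

```java
import java.util.Arrays;

public class ScalarAdd {

    // Scalar (SISD-style) addition: each iteration adds one element of x
    // to the corresponding element of y. The JIT may still auto-vectorize
    // this loop, so this is the logical model, not a guarantee about the CPU.
    static float[] add(float[] x, float[] y) {
        if (x.length != y.length) {
            throw new IllegalArgumentException("Arrays must have the same length");
        }
        float[] z = new float[x.length];
        for (int i = 0; i < x.length; i++) {
            z[i] = x[i] + y[i];
        }
        return z;
    }

    public static void main(String[] args) {
        float[] x = {1f, 2f, 3f, 4f};
        float[] y = {10f, 20f, 30f, 40f};
        System.out.println(Arrays.toString(add(x, y))); // [11.0, 22.0, 33.0, 44.0]
    }
}
```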

If we add two arrays, X and Y, on a SISD-based CPU, we expect each CPU cycle to add one element from X to one element from Y. If we do the same task on a SIMD-based CPU, each CPU cycle simultaneously performs the addition on chunks of X and Y. This means that a SIMD CPU should complete our task faster than a SISD CPU.

This is the big picture! When we look closer, we see that CPU architectures come in many flavors, so it is quite challenging to develop an application that gets the best performance out of a specific platform.

The two big competitors on the market, Intel and AMD, come with different SIMD implementations. It is not our goal to dissect this topic in detail, but it is useful to know that the first popular desktop SIMD was introduced in 1996 by Intel under the name MMX (x86 architecture). In response, the AIM alliance (Apple, IBM, and Motorola, whose semiconductor business later became Freescale Semiconductor) promoted AltiVec, an integer and single-precision floating-point SIMD implementation. Later, in 1999, Intel introduced SSE (using 128-bit registers). Since then, SIMD has evolved via the Advanced Vector Extensions: AVX and AVX2 (256-bit registers) and AVX-512 (512-bit registers). While AVX and AVX2 are supported by both Intel and AMD, AVX-512, which first shipped on Intel processors in 2016, long remained Intel-only; AMD added support with its Zen 4 processors in 2022. Hopefully, the following figure can bring some order here:

Figure 5.2 – SIMD implementation history

Figure 5.2 is just the SIMD view of a CPU's structure. In reality, platforms are much more complex and come in many flavors. There is no silver bullet: each platform has its strong and weak points. Exploiting the strong points while avoiding the weaknesses is a real challenge for any programming language that aims to get high performance out of a specific platform.

For instance, what is the proper set of instructions that the JVM should generate to squeeze the most performance out of a specific platform for computations that involve vectors (arrays)? Well, starting with JDK 16 (JEP 338), Java provides an incubator module, jdk.incubator.vector, known as the Vector API. The goal of this API is to let developers express vector computations in a platform-agnostic way; at runtime, these computations are transformed into optimal vector hardware instructions on supported CPU architectures. With JDK 19 (JEP 426), the Vector API reached its fourth incubator, so we can try out some examples that take advantage of data-parallel accelerated code in contrast to scalar implementations. But before that, let's cover the Vector API structure and terminology.
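As a first taste of what such code looks like, here is a minimal sketch of the X + Y addition written against `jdk.incubator.vector`. It assumes JDK 19 or later, with the module enabled at both compile time and run time via `--add-modules jdk.incubator.vector`; because this is an incubator API, details may change between releases. The class name `VectorAdd` and the method `add` are illustrative. `FloatVector.SPECIES_PREFERRED` lets the runtime choose the widest vector shape the platform supports (for example, typically 256 bits on an AVX2-only machine), and a scalar loop handles the leftover elements that do not fill a whole vector.

```java
import jdk.incubator.vector.FloatVector;
import jdk.incubator.vector.VectorSpecies;

import java.util.Arrays;

// Requires: javac/java --add-modules jdk.incubator.vector (incubator module)
public class VectorAdd {

    // The preferred species is chosen at runtime to match the CPU's
    // widest supported vector registers (e.g., 128, 256, or 512 bits).
    private static final VectorSpecies<Float> SPECIES = FloatVector.SPECIES_PREFERRED;

    static float[] add(float[] x, float[] y) {
        if (x.length != y.length) {
            throw new IllegalArgumentException("Arrays must have the same length");
        }
        float[] z = new float[x.length];
        int i = 0;
        // Largest multiple of the lane count that does not exceed the length.
        int upperBound = SPECIES.loopBound(x.length);
        for (; i < upperBound; i += SPECIES.length()) {
            // Load one chunk (SPECIES.length() lanes) from each array,
            // add them lane-wise, and store the chunk into z.
            FloatVector vx = FloatVector.fromArray(SPECIES, x, i);
            FloatVector vy = FloatVector.fromArray(SPECIES, y, i);
            vx.add(vy).intoArray(z, i);
        }
        // Scalar tail: elements left over after the last full chunk.
        for (; i < x.length; i++) {
            z[i] = x[i] + y[i];
        }
        return z;
    }

    public static void main(String[] args) {
        System.out.println("Lanes per vector: " + SPECIES.length()
                + " (" + SPECIES.vectorBitSize() + " bits)");
        float[] x = new float[10];
        float[] y = new float[10];
        for (int k = 0; k < x.length; k++) {
            x[k] = k;
            y[k] = 10f * k;
        }
        System.out.println(Arrays.toString(add(x, y)));
    }
}
```

The array length in the usage example is deliberately not tied to any particular lane count, so on most platforms both the vector loop and the scalar tail get exercised.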